Last update: September 25, 2025

This is a monitoring plugin to check Kubernetes clusters managed with Rancher 2.x. It uses the Rancher 2 API to monitor the state of clusters, nodes, projects, workloads and pods.

Note: This plugin is neither created nor officially supported by Rancher Labs or SUSE. As with most monitoring plugins, it is the (monitoring) open source community that contributes to this plugin.

If you are looking for commercial support for this monitoring plugin, need customized modifications, or need customized monitoring plugins in general, contact us at Infiniroot.com.

Download the plugin and save it in your Nagios/monitoring plugin folder (usually /usr/lib/nagios/plugins, depending on your distribution). Afterwards, adjust the permissions (usually chmod 755).
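A minimal sketch of the installation step, assuming the plugin was saved as check_rancher2.sh and your plugin folder is /usr/lib/nagios/plugins. The helper names install_check_rancher2 and missing_commands are hypothetical. The plugin relies on jq (since version 1.5.0); checking for curl as well is an assumption about the plugin's HTTP client.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for installing check_rancher2.sh.
# Assumption: default plugin folder /usr/lib/nagios/plugins (distribution-dependent).

install_check_rancher2() {
  local src="${1:-./check_rancher2.sh}"
  local plugin_dir="${2:-/usr/lib/nagios/plugins}"
  # install(1) copies the file and sets permissions 755 in a single step
  install -m 755 "$src" "$plugin_dir/check_rancher2.sh"
}

missing_commands() {
  # Print every command from the argument list that is not found in PATH
  local cmd rc=0
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "$cmd"
      rc=1
    fi
  done
  return "$rc"
}

# Usage (as root):
#   missing_commands jq curl || echo "install the commands listed above first"
#   install_check_rancher2 ./check_rancher2.sh /usr/lib/nagios/plugins
```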

Community contributions are welcome in the GitHub repository.

The check_rancher2 plugin was successfully tested on the following Rancher 2 versions:

| Date | Version | Changes |
|---|---|---|
| 20180629 | alpha | Started programming of script |
| 20180713 | beta1 | Public release in repository |
| 20180803 | beta2 | Check for "type", echo project name in "all workload" check, too |
| 20180806 | beta3 | Fix important bug in for loop in workload check, check for 'paused' |
| 20180906 | beta4 | Catch cluster not found and zero workloads in workload check |
| 20180906 | beta5 | Fix paused check (type 'object' has no elements to extract (arg 5)) |
| 20180921 | beta6 | Added pod(s) check within a project |
| 20180926 | beta7 | Handle a workflow in status 'updating' as warning, not critical |
| 20181107 | beta8 | Missing pod check type in help, documentation completed |
| 20181109 | 1.0.0 | Do not alert for succeeded pods |
| 20190308 | 1.1.0 | Added node(s) check |
| 20190903 | 1.1.1 | Detect invalid hostname (non-API hostname) |
| 20190903 | 1.2.0 | Allow self-signed certificates (-s) |
| 20190913 | 1.2.1 | Detect additional redirect (308) |
| 20200129 | 1.2.2 | Fix typos in workload perfdata (#11) and single cluster health (issue #12) |
| 20200523 | 1.2.3 | Handle 403 forbidden error (issue #15) |
| 20200617 | 1.3.0 | Added ignore parameter (-i) |
| 20210210 | 1.4.0 | Checking specific workloads and pods inside a namespace |
| 20210413 | 1.5.0 | Plugin now uses jq instead of jshon, fix cluster error check (issue #19) |
| 20210504 | 1.6.0 | Add usage performance data on single cluster check, fix project check |
| 20210824 | 1.6.1 | Fix cluster and project not found error (#24) |
| 20211021 | 1.7.0 | Check for additional node (pressure) conditions (#27) |
| 20211201 | 1.7.1 | Fix cluster state detection (#26) |
| 20220610 | 1.8.0 | More performance data, long parameters, other improvements (#31) |
| 20220729 | 1.9.0 | Output improvements (#32), show workload namespace (#33) |
| 20220909 | 1.10.0 | Fix ComponentStatus (#35), show K8s version in single cluster check |
| 20220909 | 1.10.0 | Allow ignoring statuses on workload checks (#29) |
| 20230110 | 1.11.0 | Allow ignoring specific workload names, provisioning cluster not critical (#39) |
| 20230202 | 1.12.0 | Add local-certs check type |
| 20231208 | 1.12.1 | Use 'command -v' instead of 'which' for required command check |
| 20250613 | 1.13.0 | Add api-token check type, output fix in local-certs check, fix --help |

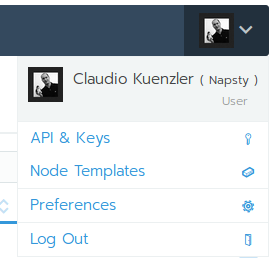

This section describes how to create API access in Rancher 2.x. Log in to your Rancher 2 environment, hover over your user icon in the top right corner and click on 'API and Keys'. Starting with Rancher 2.6, this menu entry is called 'Account & API Keys'.

You will see an overview of existing API access tokens. To create new API access, click on the 'Create API Key' button (previously 'Add Key').

In Rancher 2.6.x and newer, make sure you create the API key without a scope! Otherwise the plugin will not work.

Rancher 2 displays the access credentials in two fields: the access key (the username, starting with token-) and the secret key (the password). Store these credentials in a safe place, as this is the only time the secret key is shown.

You can now use these credentials with check_rancher2.
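Before wiring the credentials into your monitoring, you can test them directly against the Rancher API. The sketch below assumes the Rancher v3 API path /v3/clusters and HTTP basic authentication with the access key as username and the secret key as password. rancher_api_status and describe_status are hypothetical helpers, and the hints attached to each HTTP status follow generic HTTP semantics rather than documented plugin behaviour.

```shell
#!/usr/bin/env bash
# Hypothetical helpers to test Rancher 2 API credentials before using them in the plugin.
# Assumptions: Rancher v3 API path /v3/clusters, HTTP basic auth with access key/secret key.

rancher_api_status() {
  # Arguments: host access_key secret_key [insecure=yes|no]; prints the HTTP status code
  local host=$1 user=$2 pass=$3 insecure=${4:-no}
  local -a opts=(--silent --output /dev/null --write-out '%{http_code}' --user "$user:$pass")
  if [ "$insecure" = "yes" ]; then
    opts+=(--insecure)   # accept self-signed certificates, similar to the plugin's -s
  fi
  curl "${opts[@]}" "https://$host/v3/clusters"
}

describe_status() {
  # Map an HTTP status code to a hint (generic HTTP semantics, not plugin output)
  case $1 in
    200) echo "OK: credentials accepted" ;;
    301|302|307|308) echo "Redirect: -H is probably not the Rancher API hostname" ;;
    401) echo "Unauthorized: wrong access key or secret key" ;;
    403) echo "Forbidden: key lacks permissions (was it created with a scope?)" ;;
    000) echo "No HTTP response: check DNS, network and TLS" ;;
    *) echo "Unexpected HTTP status $1" ;;
  esac
}

# Usage:
#   describe_status "$(rancher_api_status rancher2.example.com token-xxxxx 'secret-key')"
```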

| Short | Long | Description |
|---|---|---|
| -H * | --apihost * | Hostname (DNS name) of the Rancher 2 management server |
| -U * | --apiuser * | Username for API access (will be in format 'token-xxxxx') |
| -P * | --apipass * | Password for API access |
| -S | --secure | Use secure connection (https) to Rancher API |
| -s | --selfsigned | Allow self-signed certificates |
| -t * | --type * | Check type; defines what kind of check you want to run |
| -c | --clustername | Cluster name (for specific cluster check) |
| -p | --projectname | Project ID in format c-xxxxx:p-xxxxx (for specific project check, needed for workload and pod checks) |
| -n | --namespacename | Namespace name (optional for specific workload check, required for specific pod check) |
| -w | --workloadname | Workload name (needed for specific workload checks) |
| -o | --podname | Pod name (needed for specific pod checks; this only makes sense if you have pods with static names) |
| -i | --ignore | Comma-separated list of status(es) to ignore ('node' and 'workload' check types), specific workload name(s) to ignore ('workload' check type) or certificate(s) to ignore ('local-certs' check type) |
| N/A | --cpu-warn | Exit with WARNING status if more than PERCENT of CPU capacity is used (supported check types: node, cluster) |
| N/A | --cpu-crit | Exit with CRITICAL status if more than PERCENT of CPU capacity is used (supported check types: node, cluster) |
| N/A | --memory-warn | Exit with WARNING status if more than PERCENT of memory capacity is used (supported check types: node, cluster) |
| N/A | --memory-crit | Exit with CRITICAL status if more than PERCENT of memory capacity is used (supported check types: node, cluster) |
| N/A | --pods-warn | Exit with WARNING status if more than PERCENT of pod capacity is used (supported check types: node, cluster) |
| N/A | --pods-crit | Exit with CRITICAL status if more than PERCENT of pod capacity is used (supported check types: node, cluster) |
| N/A | --cert-warn | DEPRECATED since 1.13.0, use --expiry-warn instead |
| N/A | --expiry-warn | Warning threshold in days before a Rancher internal certificate or the API token expires (supported check types: local-certs, api-token) |
| -h | --help | Show help and usage |

Parameters marked with * are mandatory.
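The --cpu-*, --memory-* and --pods-* thresholds compare usage against capacity as a percentage. The sketch below illustrates that idea and is not the plugin's own code: it assumes truncating integer percentages (which matches the single cluster example output, where 2380 of 4000 CPU is reported as 59%), strict "more than" comparisons, and the standard Nagios exit codes 0 (OK), 1 (WARNING) and 2 (CRITICAL). usage_percent and threshold_state are hypothetical names.

```shell
#!/usr/bin/env bash
# Hypothetical sketch of percentage thresholds (not the plugin's source code).
STATE_OK=0
STATE_WARNING=1
STATE_CRITICAL=2

usage_percent() {
  local used=$1 capacity=$2
  # Guard against division by zero when no capacity is reported
  if [ "$capacity" -le 0 ]; then echo 0; return; fi
  # Bash integer arithmetic truncates: 2380 of 4000 -> 59
  echo $(( used * 100 / capacity ))
}

threshold_state() {
  # Arguments: used capacity warn_percent crit_percent (thresholds may be empty)
  local pct
  pct=$(usage_percent "$1" "$2")
  if [ -n "$4" ] && [ "$pct" -gt "$4" ]; then
    echo "CRITICAL - ${pct}% used"; return "$STATE_CRITICAL"
  elif [ -n "$3" ] && [ "$pct" -gt "$3" ]; then
    echo "WARNING - ${pct}% used"; return "$STATE_WARNING"
  fi
  echo "OK - ${pct}% used"
  return "$STATE_OK"
}

# Usage: CPU 2380 of 4000 with --cpu-warn 50 --cpu-crit 80
#   threshold_state 2380 4000 50 80   # prints "WARNING - 59% used", returns 1
```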

| Type | Description |
|---|---|
| info | Informs about available clusters and projects and their API IDs. These IDs are needed for specific checks. |
| cluster | Checks the current status of all clusters or of a specific cluster (defined with -c clusterid) |
| node | Checks the current status of all nodes or of the nodes of a specific cluster (defined with -c clusterid) |
| project | Checks the current status of all projects or of a specific project (defined with -p projectid) |
| workload | Checks the current status of all workloads or of a specific workload (-w workloadname) within a project (-p projectid must be set!), optionally within a namespace (-n namespace) |
| pod | Checks the current status of all pods or of a specific pod (-o podname) within a project (-p projectid must be set!). A specific pod check also requires the namespace (-n namespace). Note: A specific pod check only makes sense if you use pods with static names (this is rather rare). |
| local-certs | Checks the current status of all internal Rancher certificates (e.g. rancher-webhook) in the local cluster under the System project (namespace: cattle-system). Use --expiry-warn to be alerted prior to the expiry date. |
| api-token | Checks the expiry of the API token in use. Use --expiry-warn to be alerted prior to the expiry date. |
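The parameter dependencies described above (a project ID for workload and pod checks, a namespace when a specific pod is checked) can be expressed as a small pre-flight validation, for example in a wrapper script. validate_check_args is a hypothetical helper and not part of check_rancher2, which does its own checking. The project ID pattern is an assumption based on the IDs shown in the info example (c-xxxxx:p-xxxxx and local:p-xxxxx).

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight check for wrapper scripts around check_rancher2.sh.
# Arguments: type project namespace pod (pass "" for parameters you do not set)
validate_check_args() {
  local type=$1 project=$2 namespace=$3 pod=$4
  case $type in
    info|cluster|node|project|local-certs|api-token) ;;
    workload|pod)
      if [ -z "$project" ]; then
        echo "check type '$type' requires -p projectid"
        return 1
      fi
      ;;
    *)
      echo "unknown check type '$type'"
      return 1
      ;;
  esac
  # Project IDs look like c-xxxxx:p-xxxxx (the local cluster uses local:p-xxxxx)
  if [ -n "$project" ] && ! [[ $project =~ ^[a-z0-9-]+:p-[a-z0-9]+$ ]]; then
    echo "project '$project' is not in format c-xxxxx:p-xxxxx"
    return 1
  fi
  # A specific pod (-o) is only looked up together with its namespace (-n)
  if [ "$type" = "pod" ] && [ -n "$pod" ] && [ -z "$namespace" ]; then
    echo "a specific pod check (-o) also requires -n namespace"
    return 1
  fi
  return 0
}

# Usage (exit 3 is the Nagios UNKNOWN state):
#   validate_check_args pod c-5f7k2:p-4fdsd gamma nginx-85789c55b6-625tz || exit 3
```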

Usage:

./check_rancher2.sh -H hostname -U token -P password [-S] [-s] -t checktype [-p string] [-o string] [-n string] [-w string]

Example: Show information about discovered clusters:

./check_rancher2.sh -H rancher2.example.com -S -U token-xxxxx -P aethooFaaGohthah8aezup5wiew5aedainooG2goh9Kaeti9hurolai -t info

CHECK_RANCHER2 OK - Found 3 clusters: "c-5f7k2" alias "gamma-stage" - "c-scsb6" alias "hugo-stage" - "local" alias "local" - and 6 projects: "c-5f7k2:p-4fdsd" alias "Gamma" - "c-5f7k2:p-cngpb" alias "System" - "c-scsb6:p-cxk9s" alias "hugo-stage" - "c-scsb6:p-dqxdx" alias "System" - "local:p-9rwc7" alias "Default" - "local:p-ls96l" alias "System" -|'clusters'=3;;;; 'projects'=6;;;;
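The info output above contains the cluster and project IDs needed for specific checks. A hypothetical helper, info_pairs, can turn it into one "ID alias" line per entry. It assumes the exact output layout shown above (entries of the form "id" alias "name", with the clusters listed before "- and N projects:"), which may change between plugin versions.

```shell
#!/usr/bin/env bash
# Hypothetical helper: list "ID alias" pairs from the output of "-t info".
# Arguments: plugin_output clusters|projects
info_pairs() {
  local out=$1 part
  case $2 in
    clusters) part=${out%% - and *} ;;                        # text before "- and N projects:"
    projects) part=${out#* projects: }; part=${part%%|*} ;;   # project list without perfdata
    *) echo "usage: info_pairs OUTPUT clusters|projects" >&2; return 2 ;;
  esac
  # Each entry looks like: "c-5f7k2" alias "gamma-stage"
  printf '%s\n' "$part" \
    | grep -oE '"[^"]+" alias "[^"]+"' \
    | sed -E 's/^"([^"]+)" alias "([^"]+)"$/\1 \2/'
}

# Usage:
#   info_pairs "$(./check_rancher2.sh -H rancher2.example.com -S -U token-xxxxx -P secret -t info)" projects
```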

Example: Check all pods within a project (c-5f7k2:p-4fdsd):

./check_rancher2.sh -H rancher2.example.com -S -U token-xxxxx -P aethooFaaGohthah8aezup5wiew5aedainooG2goh9Kaeti9hurolai -t pod -p c-5f7k2:p-4fdsd

CHECK_RANCHER2 OK - All pods (85) in project c-5f7k2:p-4fdsd are running|'pods_total'=85;;;; 'pods_errors'=0;;;;

Example: Single pod check within a project (c-5f7k2:p-4fdsd) and namespace (gamma):

./check_rancher2.sh -H rancher2.example.com -S -U token-xxxxx -P aethooFaaGohthah8aezup5wiew5aedainooG2goh9Kaeti9hurolai -t pod -p c-5f7k2:p-4fdsd -n gamma -o nginx-85789c55b6-625tz

CHECK_RANCHER2 OK - Pod nginx-85789c55b6-625tz is running|'pod_active'=1;;;; 'pod_error'=0;;;;

Example: Monitor all nodes across all clusters:

./check_rancher2.sh -H rancher2.example.com -S -U token-xxxxx -P aethooFaaGohthah8aezup5wiew5aedainooG2goh9Kaeti9hurolai -t node

CHECK_RANCHER2 OK - All 73 nodes are active|'nodes_total'=73;;;; 'node_errors'=0;;;; 'node_ignored'=0;;;; 'nodes_cpu_total'=98149;;;0;372000 'nodes_memory_total'=211285966848B;;;0;1321235513344 'nodes_pods_total'=1431;;;0;8030

Example: Monitor all nodes across all clusters but ignore cordoned and drained nodes:

./check_rancher2.sh -H rancher2.example.com -S -U token-xxxxx -P aethooFaaGohthah8aezup5wiew5aedainooG2goh9Kaeti9hurolai -t node -i "cordoned,drained"

CHECK_RANCHER2 OK - All nodes OK - Info: 1 node errors ignored|'nodes_total'=17;;;; 'node_errors'=0;;;; 'node_ignored'=1;;;; 'nodes_cpu_total'=23374;;;0;48000 'nodes_memory_total'=30635196416B;;;0;130837262336 'nodes_pods_total'=497;;;0;1870

node12 in cluster c-xxxxx is drained but ignored
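The -i parameter takes a comma-separated list. A sketch of how such a list can be matched against a node status follows; is_ignored is hypothetical, and the exact-match semantics (no substrings, no wildcards, surrounding spaces trimmed) is an assumption rather than confirmed plugin behaviour.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if $1 is an exact entry of the comma-separated list $2
is_ignored() {
  local item=$1 entry
  local -a entries
  IFS=',' read -r -a entries <<< "$2"
  for entry in "${entries[@]}"; do
    # Trim surrounding whitespace so "cordoned, drained" behaves like "cordoned,drained"
    entry=${entry#"${entry%%[![:space:]]*}"}
    entry=${entry%"${entry##*[![:space:]]}"}
    [ "$entry" = "$item" ] && return 0
  done
  return 1
}

# Usage:
#   is_ignored drained "cordoned,drained" && echo "node12 is drained but ignored"
```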

Example: Monitor all clusters:

./check_rancher2.sh -H rancher2.example.com -S -U token-xxxxx -P aethooFaaGohthah8aezup5wiew5aedainooG2goh9Kaeti9hurolai -t cluster

CHECK_RANCHER2 OK - All clusters (5) are healthy|'clusters_total'=5;;;; 'clusters_errors'=0;;;;

Example: Monitor a single cluster (note the performance data here):

./check_rancher2.sh -H rancher2.example.com -S -U token-xxxxx -P aethooFaaGohthah8aezup5wiew5aedainooG2goh9Kaeti9hurolai -t cluster -c c-xxxxx

CHECK_RANCHER2 OK - Cluster my-test is healthy|'cluster_healthy'=1;;;; 'component_errors'=0;;;; 'cpu'=2380;;;;4000 'memory'=1572864000B;;;0;12469846016 'pods'=55;;;;220 'usage_cpu'=59%;;;0;100 'usage_memory'=12%;;;0;100 'usage_pods'=25%
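Performance data follows the usual Nagios plugin format 'label'=value[UOM];[warn];[crit];[min];[max] after the | character. The sketch below extracts a single value by label, for example usage_cpu from the single cluster output above. perfdata_value is hypothetical and assumes single-quoted labels without spaces, as seen in check_rancher2's output.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the value of one perfdata label, without its unit.
# Arguments: plugin_output label
perfdata_value() {
  local perf="${1#*|}" label=$2 item
  local -a items
  # Perfdata items are separated by spaces
  read -r -a items <<< "$perf"
  for item in "${items[@]}"; do
    case $item in
      "'$label'="*)
        item=${item#*=}           # value[UOM];warn;crit;min;max
        item=${item%%;*}          # value[UOM]
        echo "${item%%[!0-9.]*}"  # drop a unit such as % or B
        return 0
        ;;
    esac
  done
  return 1
}
```

Used together with a Rancher host, this could look like `perfdata_value "$(./check_rancher2.sh ... -t cluster -c c-xxxxx)" usage_memory`, with the elided options filled in as in the examples above.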

Example: Monitor Rancher internal certificates:

./check_rancher2.sh -H rancher2.example.com -S -U token-xxxxx -P aethooFaaGohthah8aezup5wiew5aedainooG2goh9Kaeti9hurolai -t local-certs

CHECK_RANCHER2 CRITICAL - 1 certificate(s) expired (tls-rancher-internal expired 71 days ago -) |'total_certs'=6;;;; 'expired_certs'=1;;;; 'warning_certs'=0;;;; 'ignored_certs'=0;;;;

Example: Monitor Rancher internal certificates but ignore a specific certificate:

./check_rancher2.sh -H rancher2.example.com -S -U token-xxxxx -P aethooFaaGohthah8aezup5wiew5aedainooG2goh9Kaeti9hurolai -t local-certs -i "tls-rancher-internal"

CHECK_RANCHER2 OK - All 6 certificates are valid - 1 certificate(s) ignored: tls-rancher-internal|'total_certs'=6;;;; 'expired_certs'=0;;;; 'warning_certs'=0;;;; 'ignored_certs'=1;;;;
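The --expiry-warn threshold for the local-certs and api-token check types is given in days. A sketch of the underlying arithmetic follows, assuming expiry and current time as Unix epoch seconds, mapping an already expired certificate or token to CRITICAL (as in the example above) and one expiring within the threshold to WARNING. expiry_state is hypothetical, and the "less than or equal" boundary is an assumption.

```shell
#!/usr/bin/env bash
# Hypothetical sketch: classify an expiry date against a warning threshold in days.
# Arguments: expiry_epoch now_epoch warn_days
expiry_state() {
  local expiry=$1 now=$2 warn_days=${3:-0}
  local days=$(( (expiry - now) / 86400 ))   # whole days left (truncated)
  if [ "$expiry" -le "$now" ]; then
    echo "CRITICAL - expired $(( (now - expiry) / 86400 )) days ago"; return 2
  elif [ "$days" -le "$warn_days" ]; then
    echo "WARNING - expires in $days days"; return 1
  fi
  echo "OK - expires in $days days"
  return 0
}

# Usage with GNU date, e.g. for a known expiry date:
#   expiry_state "$(date -d '2025-12-31' +%s)" "$(date +%s)" 30
```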

    # check_rancher2 command definition
    define command{
      command_name check_rancher2
      command_line $USER1$/check_rancher2.sh -H $HOSTADDRESS$ -S -U $ARG1$ -P $ARG2$ -t $ARG3$ $ARG4$
    }

Note: HTTPS is used in this case (-S). All mandatory parameters are fixed in the command definition. Optional parameters can be added in $ARG4$ (e.g. -c clustername).

Important: Using $HOSTADDRESS$ as -H value assumes that you have created a host object which uses the Rancher2 DNS name as address.

Also see the CheckCommand object in the GitHub repository.

    # check_rancher2 command definition
    object CheckCommand "check_rancher2" {
      import "plugin-check-command"
      command = [ PluginDir + "/check_rancher2.sh" ]
      arguments = {
        "-H" = "$rancher2_address$"
        "-U" = "$rancher2_username$"
        "-P" = "$rancher2_password$"
        "-S" = { set_if = "$rancher2_ssl$" }
        "-t" = "$rancher2_type$"
        "-c" = "$rancher2_cluster$"
        "-p" = "$rancher2_project$"
        "-n" = "$rancher2_namespace$"
        "-w" = "$rancher2_workload$"
        "-o" = "$rancher2_pod$"
        "-i" = "$rancher2_ignore_status$"
        "--cpu-warn" = "$rancher2_cpu_warn$"
        "--cpu-crit" = "$rancher2_cpu_crit$"
        "--memory-warn" = "$rancher2_memory_warn$"
        "--memory-crit" = "$rancher2_memory_crit$"
        "--pods-warn" = "$rancher2_pods_warn$"
        "--pods-crit" = "$rancher2_pods_crit$"
        "--expiry-warn" = "$rancher2_expiry_warn$"
      }
      vars.rancher2_address = "$address$"
      vars.rancher2_expiry_warn = "14"
      # If you only run one Rancher2, you can define API access here, too:
      #vars.rancher2_username = "token-xxxxx"
      #vars.rancher2_password = "aethooFaaGohthah8aezup5wiew5aedainooG2goh9Kaeti9hurolai"
      #vars.rancher2_ssl = true
    }

Show Rancher 2 info:

    # Show Rancher 2 info
    define service{
      use generic-service
      host_name my-rancher2-host
      service_description Rancher2 Info
      check_command check_rancher2!token-xxxxx!aethooFaaGohthah8aezup5wiew5aedainooG2goh9Kaeti9hurolai!info
    }

Check all pods in a project:

    # Check Rancher 2 Pods in project c-5f7k2:p-4fdsd
    define service{
      use generic-service
      host_name my-rancher2-host
      service_description Rancher2 Project 1 Pods
      check_command check_rancher2!token-xxxxx!aethooFaaGohthah8aezup5wiew5aedainooG2goh9Kaeti9hurolai!pod!-p c-5f7k2:p-4fdsd
    }

Information about discovered clusters and projects:

    # Just show some info about discovered clusters and projects
    object Service "Rancher2 Info" {
      import "generic-service"
      host_name = "my-rancher2-host"
      check_command = "check_rancher2"
      vars.rancher2_username = "token-xxxxx"
      vars.rancher2_password = "aethooFaaGohthah8aezup5wiew5aedainooG2goh9Kaeti9hurolai"
      vars.rancher2_ssl = true
      vars.rancher2_type = "info"
    }

Check all available/found clusters for their health:

    # Check all available/found clusters for their health
    object Service "Rancher2 All Clusters" {
      import "generic-service"
      host_name = "my-rancher2-host"
      check_command = "check_rancher2"
      vars.rancher2_username = "token-xxxxx"
      vars.rancher2_password = "aethooFaaGohthah8aezup5wiew5aedainooG2goh9Kaeti9hurolai"
      vars.rancher2_ssl = true
      vars.rancher2_type = "cluster"
    }

Check a single cluster for its health:

    # Check a single cluster for its health
    object Service "Rancher2 Cluster Test" {
      import "generic-service"
      host_name = "my-rancher2-host"
      check_command = "check_rancher2"
      vars.rancher2_username = "token-xxxxx"
      vars.rancher2_password = "aethooFaaGohthah8aezup5wiew5aedainooG2goh9Kaeti9hurolai"
      vars.rancher2_ssl = true
      vars.rancher2_type = "cluster"
      vars.rancher2_cluster = "c-5f7k2"
    }

Check all nodes of a single cluster for their health but ignore cordoned and drained statuses:

    # Check nodes in cluster c-5f7k2 (ignore status cordoned and drained)
    object Service "Rancher2 Nodes in Cluster c-5f7k2" {
      import "generic-service"
      host_name = "my-rancher2-host"
      check_command = "check_rancher2"
      vars.rancher2_username = "token-xxxxx"
      vars.rancher2_password = "aethooFaaGohthah8aezup5wiew5aedainooG2goh9Kaeti9hurolai"
      vars.rancher2_ssl = true
      vars.rancher2_type = "node"
      vars.rancher2_cluster = "c-5f7k2"
      vars.rancher2_ignore_status = "cordoned,drained"
    }

Check all available/found projects (across all clusters) for their health:

    # Check all available/found projects (across all clusters) for their health
    object Service "Rancher2 All Projects" {
      import "generic-service"
      host_name = "my-rancher2-host"
      check_command = "check_rancher2"
      vars.rancher2_username = "token-xxxxx"
      vars.rancher2_password = "aethooFaaGohthah8aezup5wiew5aedainooG2goh9Kaeti9hurolai"
      vars.rancher2_ssl = true
      vars.rancher2_type = "project"
    }

Check a single project:

    # Check a single project
    object Service "Rancher2 Project Test" {
      import "generic-service"
      host_name = "my-rancher2-host"
      check_command = "check_rancher2"
      vars.rancher2_username = "token-xxxxx"
      vars.rancher2_password = "aethooFaaGohthah8aezup5wiew5aedainooG2goh9Kaeti9hurolai"
      vars.rancher2_ssl = true
      vars.rancher2_type = "project"
      vars.rancher2_project = "c-5f7k2:p-4fdsd"
    }

Check all workloads in a certain project:

    # Check all workloads in a certain project
    object Service "Rancher2 Workloads in Project Test" {
      import "generic-service"
      host_name = "my-rancher2-host"
      check_command = "check_rancher2"
      vars.rancher2_username = "token-xxxxx"
      vars.rancher2_password = "aethooFaaGohthah8aezup5wiew5aedainooG2goh9Kaeti9hurolai"
      vars.rancher2_ssl = true
      vars.rancher2_type = "workload"
      vars.rancher2_project = "c-5f7k2:p-4fdsd"
    }

Check a single workload in a certain project:

    # Check a single workload in a certain project
    object Service "Rancher2 Workload Web in Project Test" {
      import "generic-service"
      host_name = "my-rancher2-host"
      check_command = "check_rancher2"
      vars.rancher2_username = "token-xxxxx"
      vars.rancher2_password = "aethooFaaGohthah8aezup5wiew5aedainooG2goh9Kaeti9hurolai"
      vars.rancher2_ssl = true
      vars.rancher2_type = "workload"
      vars.rancher2_project = "c-5f7k2:p-4fdsd"
      vars.rancher2_workload = "Web"
    }

Check all pods in a certain project:

    # Check all pods in a certain project
    object Service "Rancher2 Pods in Project Test" {
      import "generic-service"
      host_name = "my-rancher2-host"
      check_command = "check_rancher2"
      vars.rancher2_username = "token-xxxxx"
      vars.rancher2_password = "aethooFaaGohthah8aezup5wiew5aedainooG2goh9Kaeti9hurolai"
      vars.rancher2_ssl = true
      vars.rancher2_type = "pod"
      vars.rancher2_project = "c-5f7k2:p-4fdsd"
    }

Check a single pod in a certain project and namespace:

    # Check a single pod in a certain project and namespace
    object Service "Rancher2 Pod Nginx1 in Project Test Namespace Test" {
      import "generic-service"
      host_name = "my-rancher2-host"
      check_command = "check_rancher2"
      vars.rancher2_username = "token-xxxxx"
      vars.rancher2_password = "aethooFaaGohthah8aezup5wiew5aedainooG2goh9Kaeti9hurolai"
      vars.rancher2_ssl = true
      vars.rancher2_type = "pod"
      vars.rancher2_project = "c-5f7k2:p-4fdsd"
      vars.rancher2_namespace = "test"
      vars.rancher2_pod = "nginx1"
    }

Check internal certificates deployed in Rancher in "local" cluster:

    # Check internal certificates in local cluster
    object Service "Rancher2 internal certificates (cattle-system)" {
      import "generic-service"
      host_name = "my-rancher2-host"
      check_command = "check_rancher2"
      vars.rancher2_username = "token-xxxxx"
      vars.rancher2_password = "aethooFaaGohthah8aezup5wiew5aedainooG2goh9Kaeti9hurolai"
      vars.rancher2_ssl = true
      vars.rancher2_type = "local-certs"
      vars.rancher2_expiry_warn = "30"
    }

Check API Token used for Rancher2 monitoring:

    # Check API token
    object Service "Rancher2 API Token" {
      import "generic-service"
      host_name = "my-rancher2-host"
      check_command = "check_rancher2"
      vars.rancher2_username = "token-xxxxx"
      vars.rancher2_password = "aethooFaaGohthah8aezup5wiew5aedainooG2goh9Kaeti9hurolai"
      vars.rancher2_ssl = true
      vars.rancher2_type = "api-token"
      vars.rancher2_expiry_warn = "7"
    }

The monitoring plugin check_rancher2 was presented at the Open Source Monitoring Conference (OSMC) 2018 in Nuremberg, Germany. You can download the presentation as a PDF document or watch the recorded video online.