Whenever you are using a MySQL replication (whether this is on a MySQL, MariaDB or Percona installation), you should be monitoring them. The open source monitoring plugin check_mysql_slavestatus does this job perfectly and will alert you when the replication stopped (for whatever reason).

This has happened a couple of days ago and monitoring correctly informed about a stopped replication on the slave server (inf-mysql02-p):

CRITICAL: Slave_SQL_Running: No

A first analysis quickly showed the physical server of this virtual slave server had crashed and the sudden power loss corrupted the replication:

root@inf-mysql02-p:~# uptime

10:12:17 up 1 day, 13:52, 1 user, load average: 0.24, 0.24, 0.25

The uptime should be more than 1 day, just saying ;-).

At the next MariaDB start up, the replication tried to continue but then ran into an error. The MySQL error log clearly shows this:

root@inf-mysql02-p:~# cat /var/log/mysql/error.log

2023-07-22 20:19:59 0 [Note] Starting MariaDB 10.6.12-MariaDB-0ubuntu0.22.04.1-log source revision as process 246

2023-07-22 20:20:00 0 [Note] mariadbd: Aria engine: starting recovery

recovered pages: 0% 10% 20% 32% 43% 53% 63% 73% 83% 93% 100% (0.0 seconds); tables to flush: 3 2 1 0

(0.0 seconds);

2023-07-22 20:20:00 0 [Note] mariadbd: Aria engine: recovery done

2023-07-22 20:20:00 0 [Note] InnoDB: Compressed tables use zlib 1.2.11

2023-07-22 20:20:00 0 [Note] InnoDB: Number of pools: 1

2023-07-22 20:20:00 0 [Note] InnoDB: Using crc32 + pclmulqdq instructions

2023-07-22 20:20:00 0 [Note] InnoDB: Initializing buffer pool, total size = 2147483648, chunk size = 134217728

2023-07-22 20:20:00 0 [Note] InnoDB: Completed initialization of buffer pool

2023-07-22 20:20:00 0 [Note] InnoDB: Starting crash recovery from checkpoint LSN=110224548081,110225247704

2023-07-22 20:20:04 0 [Note] InnoDB: Starting final batch to recover 27522 pages from redo log.

2023-07-22 20:20:08 0 [Note] InnoDB: 128 rollback segments are active.

2023-07-22 20:20:08 0 [Note] InnoDB: Removed temporary tablespace data file: "./ibtmp1"

2023-07-22 20:20:08 0 [Note] InnoDB: Creating shared tablespace for temporary tables

2023-07-22 20:20:08 0 [Note] InnoDB: Setting file './ibtmp1' size to 12 MB. Physically writing the file full; Please wait ...

2023-07-22 20:20:08 0 [Note] InnoDB: File './ibtmp1' size is now 12 MB.

2023-07-22 20:20:08 0 [Note] InnoDB: 10.6.12 started; log sequence number 110541786264; transaction id 18208946

2023-07-22 20:20:08 0 [Note] InnoDB: Loading buffer pool(s) from /var/lib/mysql/ib_buffer_pool

2023-07-22 20:20:08 0 [Note] Plugin 'FEEDBACK' is disabled.

2023-07-22 20:20:08 0 [Note] InnoDB: Buffer pool(s) load completed at 230722 20:20:08

2023-07-22 20:20:08 0 [Warning] 'innodb-locks-unsafe-for-binlog' was removed. It does nothing now and exists only for compatibility with old my.cnf files.

2023-07-22 20:20:08 0 [Note] Recovering after a crash using /var/log/mysql/mysql-bin

2023-07-22 20:20:08 0 [Note] Starting table crash recovery...

2023-07-22 20:20:08 0 [Note] Crash table recovery finished.

2023-07-22 20:20:08 0 [Note] Server socket created on IP: '0.0.0.0'.

2023-07-22 20:20:08 0 [Warning] Neither --relay-log nor --relay-log-index were used; so replication may break when this MariaDB server acts as a replica and has its hostname changed. Please use '--log-basename=#' or '--relay-log=mysqld-relay-bin' to avoid this problem.

2023-07-22 20:20:08 5 [Note] Slave I/O thread: Start asynchronous replication to master 'repl@10.10.76.161:3306' in log 'mysql-bin.004699' at position 132501043

2023-07-22 20:20:08 6 [Note] Slave SQL thread initialized, starting replication in log 'mysql-bin.004699' at position 132501043, relay log './mysqld-relay-bin.013738' position: 132239277

2023-07-22 20:20:08 0 [Note] /usr/sbin/mariadbd: ready for connections.

Version: '10.6.12-MariaDB-0ubuntu0.22.04.1-log' socket: '/run/mysqld/mysqld.sock' port: 3306 Ubuntu 22.04

2023-07-22 20:20:08 6 [ERROR] Error in Log_event::read_log_event(): 'Event invalid', data_len: 0, event_type: 0

2023-07-22 20:20:08 6 [ERROR] Error reading relay log event: slave SQL thread aborted because of I/O error

2023-07-22 20:20:08 6 [ERROR] Slave SQL: Relay log read failure: Could not parse relay log event entry. The possible reasons are: the master's binary log is corrupted (you can check this by running 'mysqlbinlog' on the binary log), the slave's relay log is corrupted (you can check this by running 'mysqlbinlog' on the relay log), a network problem, or a bug in the master's or slave's MariaDB code. If you want to check the master's binary log or slave's relay log, you will be able to know their names by issuing 'SHOW SLAVE STATUS' on this slave. Internal MariaDB error code: 1594

2023-07-22 20:20:08 6 [ERROR] Error running query, slave SQL thread aborted. Fix the problem, and restart the slave SQL thread with "SLAVE START". We stopped at log 'mysql-bin.004699' position 132501043

2023-07-22 20:20:08 6 [Note] Slave SQL thread exiting, replication stopped in log 'mysql-bin.004699' at position 132501043, master: 10.10.76.161:3306

2023-07-22 20:20:08 5 [Note] Slave I/O thread: connected to master 'repl@10.10.76.161:3306',replication started in log 'mysql-bin.004699' at position 132501043

The Error in Log_event::read_log_event() indicates that the replicated event could not be read from the relay log.

Let's stop the slave process and also take a look at the current slave status:

MariaDB [(none)]> STOP SLAVE;

Query OK, 0 rows affected (0.005 sec)

MariaDB [(none)]> SHOW SLAVE STATUS\G;

*************************** 1. row ***************************

Slave_IO_State:

Master_Host: 10.10.76.161

Master_User: repl

Master_Port: 3306

Connect_Retry: 60

Master_Log_File: mysql-bin.004861

Read_Master_Log_Pos: 29553382

Relay_Log_File: mysqld-relay-bin.013738

Relay_Log_Pos: 132239277

Relay_Master_Log_File: mysql-bin.004699

Slave_IO_Running: No

Slave_SQL_Running: No

Replicate_Do_DB:

Replicate_Ignore_DB:

Replicate_Do_Table:

Replicate_Ignore_Table:

Replicate_Wild_Do_Table:

Replicate_Wild_Ignore_Table:

Last_Errno: 1594

Last_Error: Relay log read failure: Could not parse relay log event entry. The possible reasons are: the master's binary log is corrupted (you can check this by running 'mysqlbinlog' on the binary log), the slave's relay log is corrupted (you can check this by running 'mysqlbinlog' on the relay log), a network problem, or a bug in the master's or slave's MariaDB code. If you want to check the master's binary log or slave's relay log, you will be able to know their names by issuing 'SHOW SLAVE STATUS' on this slave.

Skip_Counter: 0

Exec_Master_Log_Pos: 132501043

Relay_Log_Space: 22250692688

Until_Condition: None

Until_Log_File:

Until_Log_Pos: 0

Master_SSL_Allowed: No

Master_SSL_CA_File:

Master_SSL_CA_Path:

Master_SSL_Cert:

Master_SSL_Cipher:

Master_SSL_Key:

Seconds_Behind_Master: NULL

Master_SSL_Verify_Server_Cert: No

Last_IO_Errno: 0

Last_IO_Error:

Last_SQL_Errno: 1594

Last_SQL_Error: Relay log read failure: Could not parse relay log event entry. The possible reasons are: the master's binary log is corrupted (you can check this by running 'mysqlbinlog' on the binary log), the slave's relay log is corrupted (you can check this by running 'mysqlbinlog' on the relay log), a network problem, or a bug in the master's or slave's MariaDB code. If you want to check the master's binary log or slave's relay log, you will be able to know their names by issuing 'SHOW SLAVE STATUS' on this slave.

Replicate_Ignore_Server_Ids:

Master_Server_Id: 1

Master_SSL_Crl:

Master_SSL_Crlpath:

Using_Gtid: No

Gtid_IO_Pos:

Replicate_Do_Domain_Ids:

Replicate_Ignore_Domain_Ids:

Parallel_Mode: optimistic

SQL_Delay: 0

SQL_Remaining_Delay: NULL

Slave_SQL_Running_State:

Slave_DDL_Groups: 0

Slave_Non_Transactional_Groups: 0

Slave_Transactional_Groups: 0

1 row in set (0.000 sec)

The output shows the same SQL error as seen in the log file.

To verify a problem in the local (replicated) binary log file, we can parse the relay log (mysqld-relay-bin.013738, as seen in the error log and SHOW SLAVE STATUS output above) using the mysqlbinlog command. By grepping for capital ERROR the file can be checked for actual errors or corruption:

root@inf-mysql02-p:~# mysqlbinlog /var/lib/mysql/mysqld-relay-bin.013738 | grep ERROR

ERROR: Error in Log_event::read_log_event(): 'Event invalid', data_len: 0, event_type: 0

ERROR: Could not read entry at offset 132239277: Error in log format or read error.

Indeed there are errors in this local bin log. And - no surprise - the error matches the log position also seen in the slave status above.

Luckily the crash only happened on the slave, the master did not suffer from any crash and has therefore consistent data (this can also be manually verified by running mysqlbinlog on the binary logs on the master, grepping for the string ERROR). This means we can fix this broken replication on the slave server by "re-executing" the events before the corruption!

In an older article, I explained how to do a full MySQL replication re-sync when a master-slave replication needs to be rebuilt. A similar approach is used here, by defining the master's binary log (file name) and position.

We require the following information, which we can extract from above's SHOW SLAVE STATUS command:

This is basically the information (hopefully) still available on the MariaDB master server where the replication stopped working.

We can now reset the slave and change the replication "position":

mysql> RESET SLAVE;

Query OK, 0 rows affected (0.04 sec)

MariaDB [(none)]> CHANGE MASTER TO MASTER_LOG_FILE='mysql-bin.004699', MASTER_LOG_POS=132501043;

Query OK, 0 rows affected (0.019 sec)

MariaDB [(none)]> START SLAVE;

Query OK, 0 rows affected (0.000 sec)

As the replication "goes back in time" and re-executes events before the hardware crash, some errors might appear whie looking at the SHOW SLAVE STATUS. Such a typical error is the following:

Last_Error: Could not execute Write_rows_v1 event on table app.background_worker_run_info; Duplicate entry '32570d3b-5877-4730-b13b-53a2ad9dce4e' for key 'PRIMARY', Error_code: 1062; handler error HA_ERR_FOUND_DUPP_KEY; the event's master log mysql-bin.004699, end_log_pos 132502035

As this write event already occurred, the unique primary key is already in place. This write error can therefore safely be ignored:

MariaDB [(none)]> STOP SLAVE ; SET GLOBAL sql_slave_skip_counter = 1; START SLAVE;

There might be a couple of the same DUPLICATE KEY errors. Re-apply the command above until the DUPLICATE KEY errors are gone (in my case I needed to do this 4 times).

Once the DUPLICATE KEY errors were cleared, the replication started working again:

MariaDB [(none)]> SHOW SLAVE STATUS\G;

*************************** 1. row ***************************

Slave_IO_State: Waiting for master to send event

Master_Host: 10.10.76.161

Master_User: repl

Master_Port: 3306

Connect_Retry: 60

Master_Log_File: mysql-bin.004827

Read_Master_Log_Pos: 117549478

Relay_Log_File: mysqld-relay-bin.000010

Relay_Log_Pos: 4897978

Relay_Master_Log_File: mysql-bin.004702

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

Replicate_Do_DB:

Replicate_Ignore_DB:

Replicate_Do_Table:

Replicate_Ignore_Table:

Replicate_Wild_Do_Table:

Replicate_Wild_Ignore_Table:

Last_Errno: 0

Last_Error:

Skip_Counter: 0

Exec_Master_Log_Pos: 4907180

Relay_Log_Space: 17260963132

Until_Condition: None

Until_Log_File:

Until_Log_Pos: 0

Master_SSL_Allowed: No

Master_SSL_CA_File:

Master_SSL_CA_Path:

Master_SSL_Cert:

Master_SSL_Cipher:

Master_SSL_Key:

Seconds_Behind_Master: 136974

Master_SSL_Verify_Server_Cert: No

Last_IO_Errno: 0

Last_IO_Error:

Last_SQL_Errno: 0

Last_SQL_Error:

Replicate_Ignore_Server_Ids:

Master_Server_Id: 1

Master_SSL_Crl:

Master_SSL_Crlpath:

Using_Gtid: No

Gtid_IO_Pos:

Replicate_Do_Domain_Ids:

Replicate_Ignore_Domain_Ids:

Parallel_Mode: optimistic

SQL_Delay: 0

SQL_Remaining_Delay: NULL

Slave_SQL_Running_State: Write_rows_log_event::write_row(-1) on table `background_worker_run_info`

Slave_DDL_Groups: 0

Slave_Non_Transactional_Groups: 0

Slave_Transactional_Groups: 1141

1 row in set (0.000 sec)

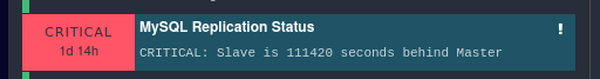

The slave now needs to catch up (Seconds_Behind_Master) and was also seen by monitoring:

Eventually the slave caught up with the master a few minutes later.

MariaDB [(none)]> SHOW SLAVE STATUS\G;

*************************** 1. row ***************************

Slave_IO_State: Waiting for master to send event

Master_Host: 10.10.76.161

Master_User: repl

Master_Port: 3306

Connect_Retry: 60

Master_Log_File: mysql-bin.004864

Read_Master_Log_Pos: 107935154

Relay_Log_File: mysqld-relay-bin.000495

Relay_Log_Pos: 107736336

Relay_Master_Log_File: mysql-bin.004864

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

[...]

Slave_SQL_Running_State: Slave has read all relay log; waiting for more updates

Slave_DDL_Groups: 0

Slave_Non_Transactional_Groups: 0

Slave_Transactional_Groups: 95609

1 row in set (0.000 sec)

No comments yet.

AWS Android Ansible Apache Apple Atlassian BSD Backup Bash Bluecoat CMS Chef Cloud Coding Consul Containers CouchDB DB DNS Databases Docker ELK Elasticsearch Filebeat FreeBSD Galera Git GlusterFS Grafana Graphics HAProxy HTML Hacks Hardware Icinga Influx Internet Java KVM Kibana Kodi Kubernetes LVM LXC Linux Logstash Mac Macintosh Mail MariaDB Minio MongoDB Monitoring Multimedia MySQL NFS Nagios Network Nginx OSSEC OTRS Observability Office OpenSearch PHP Perl Personal PostgreSQL PowerDNS Proxmox Proxy Python Rancher Rant Redis Roundcube SSL Samba Seafile Security Shell SmartOS Solaris Surveillance Systemd TLS Tomcat Ubuntu Unix VMware Varnish Virtualization Windows Wireless Wordpress Wyse ZFS Zoneminder