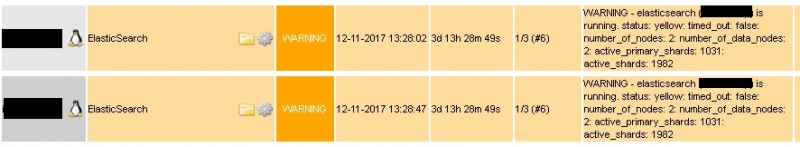

Our monitoring reported a yellow Elasticsearch status on our internal ELK cluster.

I checked the cluster health manually and, indeed, it showed 80 unassigned shards:

    # curl "http://es01.example.com:9200/_cluster/health?pretty&human"
    {
      "cluster_name" : "escluster",
      "status" : "yellow",
      "timed_out" : false,
      "number_of_nodes" : 2,
      "number_of_data_nodes" : 2,
      "active_primary_shards" : 1031,
      "active_shards" : 1982,
      "relocating_shards" : 0,
      "initializing_shards" : 0,
      "unassigned_shards" : 80,
      "delayed_unassigned_shards" : 0,
      "number_of_pending_tasks" : 0,
      "number_of_in_flight_fetch" : 0,
      "task_max_waiting_in_queue" : "0s",
      "task_max_waiting_in_queue_millis" : 0,
      "active_shards_percent" : "96.1%",
      "active_shards_percent_as_number" : 96.12027158098934
    }
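The percentage in that output can be reproduced from the shard counters. Here is a minimal Python sketch using only the numbers shown above. The function names are made up for illustration, and the formula assumes the percentage is simply active shards divided by all shards:

```python
# Counters copied from the _cluster/health output above.
health = {
    "active_primary_shards": 1031,
    "active_shards": 1982,
    "initializing_shards": 0,
    "unassigned_shards": 80,
}

def active_shards_percent(h):
    """Active shards as a percentage of all shards (assumed formula)."""
    total = h["active_shards"] + h["initializing_shards"] + h["unassigned_shards"]
    return 100.0 * h["active_shards"] / total

def active_replicas(h):
    """Active shards that are not primaries, i.e. assigned replicas."""
    return h["active_shards"] - h["active_primary_shards"]

percent = active_shards_percent(health)
replicas = active_replicas(health)
print(f"{percent:.2f}% active, {replicas} replicas assigned")
# prints: 96.12% active, 951 replicas assigned
```

If every index has exactly one replica (an assumption, the output does not say so), 1031 replicas are expected and 951 are assigned, which leaves exactly the 80 unassigned shards. That would mean all primaries are active and only replicas are missing, which fits a yellow status.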

The Elasticsearch logs contained entries like this one:

[2017-12-11T13:42:30,362][INFO ][o.e.c.r.a.DiskThresholdMonitor] [es01] low disk watermark [85%] exceeded on [t3GAvhY1SS2xZkt4U389jw][es02][/var/lib/elasticsearch/nodes/0] free: 222gb[12.2%], replicas will not be assigned to this node

A quick check of the disk space on the ES01 node revealed that 89% is currently used:

DISK OK - free space: /var/lib/elasticsearch 222407 MB (11% inode=99%):

That's fine for our monitoring (the warning threshold is 90%), but Elasticsearch also monitors disk usage itself. Once a node's disk usage exceeds 85%, Elasticsearch stops allocating new shards to that node, which is why the replicas stayed unassigned.

From the ElasticSearch documentation:

cluster.routing.allocation.disk.watermark.low

Controls the low watermark for disk usage. It defaults to 85%, meaning ES will not allocate new shards to nodes once they have more than 85% disk used.
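The log entry shown earlier carries both numbers needed for this check: the configured watermark and the free space on the affected node. The following Python sketch extracts them with a regular expression and compares used space against the watermark. The pattern and function name are illustrative and only tested against this one log line; other Elasticsearch versions may word the message differently.

```python
import re

# Log line copied from the post.
LOG_LINE = (
    "[2017-12-11T13:42:30,362][INFO ][o.e.c.r.a.DiskThresholdMonitor] [es01] "
    "low disk watermark [85%] exceeded on [t3GAvhY1SS2xZkt4U389jw][es02]"
    "[/var/lib/elasticsearch/nodes/0] free: 222gb[12.2%], "
    "replicas will not be assigned to this node"
)

# Captures the watermark, the node name and the free-space percentage.
PATTERN = re.compile(
    r"low disk watermark \[(?P<watermark>[\d.]+)%\] exceeded on "
    r"\[[^\]]+\]\[(?P<node>[^\]]+)\]\[[^\]]+\] "
    r"free: [^\[]+\[(?P<free>[\d.]+)%\]"
)

def parse_watermark_entry(line):
    """Return node, watermark and used percentage, or None if no match."""
    match = PATTERN.search(line)
    if match is None:
        return None
    watermark = float(match.group("watermark"))
    used = 100.0 - float(match.group("free"))
    return {
        "node": match.group("node"),
        "watermark": watermark,
        "used_percent": used,
        "exceeded": used > watermark,
    }

entry = parse_watermark_entry(LOG_LINE)
print(entry["node"], round(entry["used_percent"], 1), entry["exceeded"])
```

For the entry above this reports node es02 at roughly 87.8% used, which is over the 85% low watermark but still under a 90% monitoring threshold.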

As this is quite a big partition of 1.8TB, I decided to increase the watermark.low to 95%. I modified /etc/elasticsearch/elasticsearch.yml and added at the end:

    # tail /etc/elasticsearch/elasticsearch.yml
    # ---------------------------------- Various -----------------------------------
    #
    # Require explicit names when deleting indices:
    #
    #action.destructive_requires_name: true
    # 20171211 Claudio: Set higher disk threshold (default is 85%)
    cluster.routing.allocation.disk.watermark.low: "95%"
    # 20171211 Claudio: Do not relocate shards to another node (default is true)
    cluster.routing.allocation.disk.include_relocations: false

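As you can see, I also set cluster.routing.allocation.disk.include_relocations to false. Our setup is a two-node Elasticsearch cluster in which both nodes have exactly the same disk size. Their disk usage should be roughly equal, so it makes no sense for Elasticsearch to start moving shards from one node to the other when almost no disk space is left (hitting the watermark.high value). Note that, according to the Elasticsearch documentation, this setting only controls whether shards that are currently being relocated are counted when a node's disk usage is computed; it does not disable relocation by itself.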
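One thing worth checking when raising the low watermark: the high watermark (cluster.routing.allocation.disk.watermark.high) defaults to 90%, so a low watermark of 95% ends up above it. This worked on the cluster described here, but some Elasticsearch versions may validate the ordering and reject such a combination. The sketch below is a hypothetical pre-flight check in Python. The helper names are invented, and it only handles percentage values, not absolute sizes such as "500mb".

```python
def parse_percent(value):
    """Turn a string such as "95%" into the float 95.0."""
    return float(value.strip().rstrip("%"))

def check_watermark_order(low, high="90%"):
    """Return a list of warnings; the list is empty if low <= high.

    The default for `high` mirrors the documented default of 90%.
    """
    warnings = []
    if parse_percent(low) > parse_percent(high):
        warnings.append(f"low watermark {low} is above high watermark {high}")
    return warnings

default_check = check_watermark_order("85%")  # documented defaults
post_check = check_watermark_order("95%")     # value used in this post
print(default_check)
print(post_check)
```

If the check reports a warning, raising watermark.high along with watermark.low would keep the two thresholds in a consistent order.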

After the config modifications, Elasticsearch was restarted:

    # systemctl restart elasticsearch

It took quite some time for the shards to recover, but eventually all of them were assigned again.
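Rather than polling the health endpoint by hand during such a recovery, the cluster health API accepts the wait_for_status and timeout parameters and holds the request until the status is reached or the timeout expires. Here is a small Python sketch with the standard library. The function names are illustrative and the host is the example host from this post.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

def health_url(base_url, wait_for_status="green", timeout="60s"):
    """Build a _cluster/health URL that waits for the given status."""
    query = urlencode({"wait_for_status": wait_for_status, "timeout": timeout})
    return f"{base_url}/_cluster/health?{query}"

def wait_for_status(base_url, status="green", timeout="60s"):
    """Ask the cluster to answer once `status` is reached or `timeout` passes.

    Some versions answer a timed-out wait with an HTTP error status, which
    urlopen raises as urllib.error.HTTPError; callers may want to catch it.
    """
    with urlopen(health_url(base_url, status, timeout)) as response:
        health = json.loads(response.read().decode("utf-8"))
    return health["status"], health["timed_out"]

url = health_url("http://es01.example.com:9200")
print(url)
# prints: http://es01.example.com:9200/_cluster/health?wait_for_status=green&timeout=60s
```

Calling wait_for_status("http://es01.example.com:9200") in a loop would then report when all shards are assigned again.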

Note: This can and should be done online, without having to restart Elasticsearch:

    # curl -X PUT -H 'Content-Type: application/json' "http://es01.example.com:9200/_cluster/settings" -d '{ "transient": { "cluster.routing.allocation.disk.watermark.low": "95%", "cluster.routing.allocation.disk.include_relocations": "false" } }'
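The same request can be sent from a script. Below is a minimal Python sketch with the standard library that builds the PUT request for the cluster settings API. The function name is invented for illustration, and the request is only constructed here, not sent.

```python
import json
from urllib.request import Request, urlopen

def build_settings_request(base_url, low_watermark, include_relocations):
    """Build a PUT request that applies transient disk allocation settings."""
    body = {
        "transient": {
            "cluster.routing.allocation.disk.watermark.low": low_watermark,
            "cluster.routing.allocation.disk.include_relocations": include_relocations,
        }
    }
    return Request(
        url=f"{base_url}/_cluster/settings",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PUT",
    )

request = build_settings_request("http://es01.example.com:9200", "95%", False)
print(request.get_method(), request.full_url)
# To actually send it against a reachable cluster: urlopen(request).read()
```

Transient settings are lost after a full cluster restart. Using "persistent" instead of "transient" as the top-level key keeps them across restarts.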

Claudio Kuenzler from Sweden wrote on Apr 14th, 2019:

Hi arefe. You would have to check the Dockerfile to see where your Elasticsearch takes its settings from. In production environments I strongly suggest not running Elasticsearch (or any other stateful application) in a Docker container. This troubleshooting example alone is a hint as to why.

arefe wrote on Apr 14th, 2019:

Thank you for your help, but I have a question: how can I access elasticsearch.yml? I'm using Elasticsearch on Docker, and I don't have this file in usr/share/elasticsearch/config or in etc!
