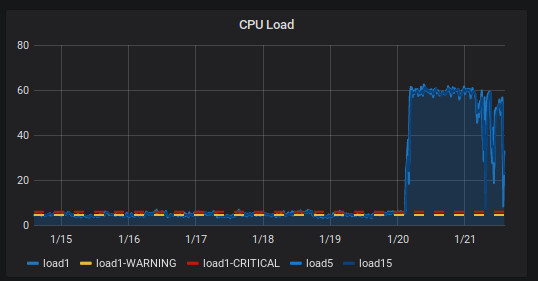

Since the weekend, our Icinga 2 monitoring server (running Icinga 2 version 2.6) had been under very high load.

Restarting Icinga 2 didn't help, and it got worse: Icinga 2 became so slow that we saw outages between the master and the satellite servers, and the user interface (classicui in this case) showed status outages as well.

In the application log (/var/log/icinga2/icinga2.log) I came across a lot of errors I hadn't seen before:

```
[2019-01-21 14:06:59 +0100] critical/Socket: send() failed with error code 32, "Broken pipe"
[2019-01-21 14:06:59 +0100] critical/LivestatusQuery: Cannot write to TCP socket.
[2019-01-21 14:06:59 +0100] critical/LivestatusQuery: Cannot write query response to socket.
[2019-01-21 14:06:59 +0100] critical/ThreadPool: Exception thrown in event handler:
Error: Tried to read from closed socket.
(0) libbase.so.2.6.1:
(1) libbase.so.2.6.1:
(2) libbase.so.2.6.1: icinga::NetworkStream::Read(void*, unsigned long, bool) (+0x7e) [0x2b503cfa343e]
(3) libbase.so.2.6.1: icinga::StreamReadContext::FillFromStream(boost::intrusive_ptr
(4) libbase.so.2.6.1: icinga::Stream::ReadLine(icinga::String*, icinga::StreamReadContext&, bool) (+0x5c) [0x2b503cfb3bbc]
(5) liblivestatus.so.2.6.1: icinga::LivestatusListener::ClientHandler(boost::intrusive_ptr
(6) libbase.so.2.6.1: icinga::ThreadPool::WorkerThread::ThreadProc(icinga::ThreadPool::Queue&) (+0x328) [0x2b503cfe9f78]
(7) libboost_thread.so.1.54.0:
(8) libpthread.so.0:
(9) libc.so.6: clone (+0x6d) [0x2b503de51bed]
```

These errors started on January 20th at 02:58:

```
root@icingahost:/ # zgrep critical icinga2.log-20190120.gz |more
[2019-01-20 02:47:53 +0100] critical/ApiListener: Client TLS handshake failed (from [satellite]:55543)
[2019-01-20 02:52:53 +0100] critical/ApiListener: Client TLS handshake failed (from [satellite]:57401)
[2019-01-20 02:57:53 +0100] critical/ApiListener: Client TLS handshake failed (from [satellite]:59271)
[2019-01-20 02:58:50 +0100] critical/Socket: send() failed with error code 32, "Broken pipe"
[2019-01-20 02:58:50 +0100] critical/LivestatusQuery: Cannot write to TCP socket.
[2019-01-20 02:58:50 +0100] critical/LivestatusQuery: Cannot write query response to socket.
[2019-01-20 02:58:50 +0100] critical/ThreadPool: Exception thrown in event handler:
[2019-01-20 02:59:05 +0100] critical/Socket: send() failed with error code 32, "Broken pipe"
[2019-01-20 02:59:05 +0100] critical/LivestatusQuery: Cannot write to TCP socket.
[2019-01-20 02:59:05 +0100] critical/LivestatusQuery: Cannot write query response to socket.
[2019-01-20 02:59:05 +0100] critical/ThreadPool: Exception thrown in event handler:
[2019-01-20 02:59:08 +0100] critical/Socket: send() failed with error code 32, "Broken pipe"
[2019-01-20 02:59:08 +0100] critical/LivestatusQuery: Cannot write to TCP socket.
[2019-01-20 02:59:08 +0100] critical/LivestatusQuery: Cannot write query response to socket.
[2019-01-20 02:59:08 +0100] critical/ThreadPool: Exception thrown in event handler:
[2019-01-20 03:02:10 +0100] critical/Socket: send() failed with error code 32, "Broken pipe"
[2019-01-20 03:02:10 +0100] critical/LivestatusQuery: Cannot write to TCP socket.
[2019-01-20 03:02:10 +0100] critical/LivestatusQuery: Cannot write query response to socket.
[2019-01-20 03:02:10 +0100] critical/ThreadPool: Exception thrown in event handler:
[2019-01-20 03:02:44 +0100] critical/Socket: send() failed with error code 32, "Broken pipe"
[2019-01-20 03:02:44 +0100] critical/LivestatusQuery: Cannot write to TCP socket.
[2019-01-20 03:02:44 +0100] critical/LivestatusQuery: Cannot write query response to socket.
[2019-01-20 03:02:44 +0100] critical/ThreadPool: Exception thrown in event handler:
[2019-01-20 03:02:53 +0100] critical/ApiListener: Client TLS handshake failed (from [satellite]:32903)
[2019-01-20 03:03:15 +0100] critical/Socket: send() failed with error code 32, "Broken pipe"
[2019-01-20 03:03:15 +0100] critical/LivestatusQuery: Cannot write to TCP socket.
[2019-01-20 03:03:15 +0100] critical/LivestatusQuery: Cannot write query response to socket.
[2019-01-20 03:03:15 +0100] critical/ThreadPool: Exception thrown in event handler:
[2019-01-20 03:03:21 +0100] critical/Socket: send() failed with error code 32, "Broken pipe"
[2019-01-20 03:03:21 +0100] critical/LivestatusQuery: Cannot write to TCP socket.
[2019-01-20 03:03:21 +0100] critical/LivestatusQuery: Cannot write query response to socket.
```

So the timing matches the graph: this is exactly when the load started to increase!
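Instead of paging through the zgrep output, the first occurrence of each error type can also be pulled out with a few lines of Python. This is only a rough sketch: it assumes the log lines look exactly like the excerpt above, and the helper names (`parse_critical`, `first_occurrence`, `count_per_minute`, `read_log`) are made up for this example.

```python
import gzip
import re
from collections import Counter

# Timestamp and component of a critical Icinga 2 log line, e.g.
# [2019-01-20 02:58:50 +0100] critical/Socket: send() failed ...
CRITICAL_RE = re.compile(
    r"^\[(\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}) [+-]\d{4}\] critical/(\w+):"
)


def parse_critical(lines):
    """Yield (timestamp, component) for every critical log line."""
    for line in lines:
        match = CRITICAL_RE.match(line)
        if match:
            yield match.group(1), match.group(2)


def first_occurrence(lines):
    """Map each component to the timestamp of its first critical message."""
    first = {}
    for timestamp, component in parse_critical(lines):
        first.setdefault(component, timestamp)
    return first


def count_per_minute(lines, component=None):
    """Count critical messages per minute, optionally for one component only."""
    counts = Counter()
    for timestamp, comp in parse_critical(lines):
        if component is None or comp == component:
            counts[timestamp[:16]] += 1  # keep "YYYY-MM-DD HH:MM"
    return counts


def read_log(path):
    """Open a plain or gzip-compressed log file as text."""
    opener = gzip.open if path.endswith(".gz") else open
    return opener(path, "rt", encoding="utf-8", errors="replace")
```

Called as `first_occurrence(read_log("icinga2.log-20190120.gz"))`, this returns one start time per component (Socket, LivestatusQuery, ApiListener and so on), which can then be compared with the load graph.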

But what is causing this? According to the errors in icinga2.log, it must have something to do with Livestatus, which in this setup is served on a local socket and only accessed by a NagVis installation.
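To make the error messages more concrete: "Broken pipe" (error code 32) is what a server gets when it writes to a socket that the other side has already closed. The following Python sketch shows what a well-behaved Livestatus exchange could look like from the client side: send one query, signal the end of the request, read the complete response, and close the socket. It is only an illustration and not NagVis code; `build_query` and `query_livestatus` are hypothetical helper names, and the table and column names are example values.

```python
import socket


def build_query(table, columns):
    """Build a Livestatus query; the blank line at the end terminates it."""
    return f"GET {table}\nColumns: {' '.join(columns)}\nOutputFormat: json\n\n"


def query_livestatus(sock, query, bufsize=4096):
    """Send one query, read the whole response, and always close the socket."""
    try:
        sock.sendall(query.encode("utf-8"))
        # Half-close: tell the server that no further queries will follow.
        sock.shutdown(socket.SHUT_WR)
        chunks = []
        while True:
            chunk = sock.recv(bufsize)
            if not chunk:  # the server has sent everything and closed its side
                break
            chunks.append(chunk)
        return b"".join(chunks).decode("utf-8")
    finally:
        # Closing here, even on errors, avoids leaving half-open connections.
        sock.close()
```

The socket passed in would be an already connected one, for example from `socket.create_connection((host, port))` for a TCP listener or an `AF_UNIX` socket connected to the Livestatus socket path; host, port and path depend on the local setup and are not taken from this installation.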

Searching for this error message, I came across an issue on GitHub. It didn't really solve my load problem (in that case it was Thruk causing the errors), but a comment from dnsmichi pointed me in the right direction:

"If your client application does not close the socket, or wait for processing the response, such errors occur."

As in this case it can only be NagVis accessing Livestatus, I checked the Apache access logs for NagVis and narrowed it down to four internal IP addresses that were constantly accessing NagVis. After identifying these hosts and the responsible teams, one browser after another was closed until only one machine was still accessing NagVis. It turned out to be this single machine causing the issues. After a reboot of this particular machine, the load on our Icinga 2 server immediately went back to normal and no more errors appeared in the logs.
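Narrowing down the clients in the Apache access log can be scripted, too. The sketch below counts requests per client IP for a given URL prefix. It assumes the common/combined log format and that NagVis is served under `/nagvis`; both are assumptions about the setup, and `count_clients` is just a name chosen for this example.

```python
from collections import Counter


def count_clients(lines, path_prefix="/nagvis"):
    """Count requests per client IP whose request path starts with path_prefix."""
    counts = Counter()
    for line in lines:
        # Combined log format: IP ident user [time] "METHOD /path PROTO" status ...
        parts = line.split('"')
        if len(parts) < 3:
            continue  # not a regular access log line
        client_ip = parts[0].split(" ", 1)[0]
        request = parts[1].split()
        if len(request) >= 2 and request[1].startswith(path_prefix):
            counts[client_ip] += 1
    return counts
```

Feeding it the open access log file and calling `.most_common(10)` on the result lists the busiest clients first, which is a quick way to spot a handful of machines that poll NagVis constantly.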

TL;DR: It's not always the application on the server to blame. Clients/browsers can be the source of a problem, too.
