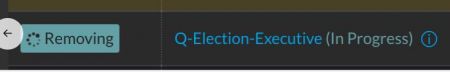

Today I came across an annoying bug in Rancher 1.6 (currently running 1.6.26) where a service was stuck in removing state:

All containers of this service were already deleted in the user interface. I verified this on the Docker hosts using "docker ps -a" and yes, all container instances were correctly removed. But the service in Rancher was still stuck in removing.

Furthermore in Admin -> Processes the service.remove processes (which seem to be cause of being stuck in that service removing in progress) never disappeared and were re-started every 2 minutes:

Although I'm not sure what caused this, the reason *might* be several actions happening on that particular service almost at the same time:

As you can see, while I attempted a service rollback, another user deleted the same service at (almost) the same time. I wouldn't be surprised if this has upset Rancher in such a way, that the "delete" task happened faster than the "rollback", causing the "rollback" to hiccup the system. The second "delete" attempt was to see if it would somehow "force" the removal, but it didn't work. So far to the theory (only someone from Rancher could eventually confirm this or better give the real reason what has happened), let's go solve this.

Because all attempts using the Rancher UI and API failed (the service staid in removing state), I began my research and came across the following issues:

The last one (issue 16694) basically describes the exact same bug and I also shared my information there. Unfortunately the issue was closed recently (23 days ago at the time of this writing), indicating:

"With the release of Rancher 2.0, development on v1.6 is only limited to critical bug fixes and security patches."

That's a shame because I consider this bug a critical bug. But as I didn't have time to wait for a potential bugfix, I had to go ahead and fix the problem anyway.

The other links above often mention SQL queries directly in Rancher's database to solve the issue, but nobody so far has really solved the problem. Let's try and solve this in the DB then!

Spoiler + Disclaimer: The following steps worked for me and solved the problem. However this should really be your last resort and you have to make sure all containers launched by this service are really stopped/removed. Also make sure there are no volume mounts (or unmounts in that case) hanging which could cause the service being stuck in removing (and waiting for a host). You do this at your own risk! (You made a backup, right?)

Let's take a closer look at the Rancher database. The following tables exist as of 1.6.26:

mysql> show tables;

+-----------------------------------------------+

| Tables_in_rancher |

+-----------------------------------------------+

| DATABASECHANGELOG |

| DATABASECHANGELOGLOCK |

| account |

| account_link |

| agent |

| agent_group |

| audit_log |

| auth_token |

| backup |

| backup_target |

| catalog |

| catalog_category |

| catalog_file |

| catalog_label |

| catalog_template |

| catalog_template_category |

| catalog_version |

| catalog_version_label |

| certificate |

| cluster |

| cluster_host_map |

| cluster_membership |

| config_item |

| config_item_status |

| container_event |

| credential |

| credential_instance_map |

| data |

| deployment_unit |

| dynamic_schema |

| dynamic_schema_role |

| environment |

| external_event |

| external_handler |

| external_handler_external_handler_process_map |

| external_handler_process |

| generic_object |

| global_load_balancer |

| healthcheck_instance |

| healthcheck_instance_host_map |

| host |

| host_ip_address_map |

| host_label_map |

| host_template |

| host_vnet_map |

| image |

| image_storage_pool_map |

| instance |

| instance_host_map |

| instance_label_map |

| instance_link |

| ip_address |

| ip_address_nic_map |

| ip_association |

| ip_pool |

| label |

| load_balancer |

| load_balancer_certificate_map |

| load_balancer_config |

| load_balancer_config_listener_map |

| load_balancer_host_map |

| load_balancer_listener |

| load_balancer_target |

| machine_driver |

| mount |

| network |

| network_driver |

| network_service |

| network_service_provider |

| network_service_provider_instance_map |

| nic |

| offering |

| physical_host |

| port |

| process_execution |

| process_instance |

| project_member |

| project_template |

| region |

| resource_pool |

| scheduled_upgrade |

| secret |

| service |

| service_consume_map |

| service_event |

| service_expose_map |

| service_index |

| service_log |

| setting |

| snapshot |

| snapshot_storage_pool_map |

| storage_driver |

| storage_pool |

| storage_pool_host_map |

| subnet |

| subnet_vnet_map |

| task |

| task_instance |

| ui_challenge |

| user_preference |

| vnet |

| volume |

| volume_storage_pool_map |

| volume_template |

| zone |

+-----------------------------------------------+

105 rows in set (0.03 sec)

In one of the links above, the table process_instance was mentioned. This table looks like this:

mysql> describe process_instance;

+---------------------------+--------------+------+-----+---------+----------------+

| Field | Type | Null | Key | Default | Extra |

+---------------------------+--------------+------+-----+---------+----------------+

| id | bigint(20) | NO | PRI | NULL | auto_increment |

| start_time | datetime | YES | MUL | NULL | |

| end_time | datetime | YES | MUL | NULL | |

| data | mediumtext | YES | | NULL | |

| priority | int(11) | YES | MUL | 0 | |

| process_name | varchar(128) | YES | | NULL | |

| resource_type | varchar(128) | YES | | NULL | |

| resource_id | varchar(128) | YES | | NULL | |

| result | varchar(128) | YES | | NULL | |

| exit_reason | varchar(128) | YES | | NULL | |

| phase | varchar(128) | YES | | NULL | |

| start_process_server_id | varchar(128) | YES | | NULL | |

| running_process_server_id | varchar(128) | YES | | NULL | |

| execution_count | bigint(20) | NO | | 0 | |

| run_after | datetime | YES | MUL | NULL | |

| account_id | bigint(20) | YES | MUL | NULL | |

+---------------------------+--------------+------+-----+---------+----------------+

16 rows in set (0.03 sec)

After checking some entries of this table, I figured that "still running" processes (as seen in the UI) don't have an end_time and I can also check for a specific process.name:

mysql> select * from process_instance where end_time is NULL and process_name = 'service.remove';

+----------+---------------------+----------+------+----------+----------------+---------------+-------------+--------+-------------+----------+-------------------------+---------------------------+-----------------+---------------------+------------+

| id | start_time | end_time | data | priority | process_name | resource_type | resource_id | result | exit_reason | phase | start_process_server_id | running_process_server_id | execution_count | run_after | account_id |

+----------+---------------------+----------+------+----------+----------------+---------------+-------------+--------+-------------+----------+-------------------------+---------------------------+-----------------+---------------------+------------+

| 16772517 | 2019-02-13 12:09:56 | NULL | {} | 0 | service.remove | service | 534 | NULL | NULL | HANDLERS | 172.17.0.2 | 172.17.0.2 | 51 | 2019-02-13 13:34:35 | 3480 |

| 16785969 | 2019-02-13 12:46:11 | NULL | {} | 0 | service.remove | service | 534 | NULL | NULL | HANDLERS | 172.17.0.2 | 172.17.0.2 | 33 | 2019-02-13 13:34:53 | 3480 |

+----------+---------------------+----------+------+----------+----------------+---------------+-------------+--------+-------------+----------+-------------------------+---------------------------+-----------------+---------------------+------------+

2 rows in set (0.03 sec)

Surprise surprise, these are the same entries as seen in the Rancher UI in Admin -> Processes!

From this query response (but also from the UI) we can grab the service's resource id (534). Let's take a closer look at the service table:

mysql> describe service;

+--------------------+---------------+------+-----+---------+----------------+

| Field | Type | Null | Key | Default | Extra |

+--------------------+---------------+------+-----+---------+----------------+

| id | bigint(20) | NO | PRI | NULL | auto_increment |

| name | varchar(255) | YES | MUL | NULL | |

| account_id | bigint(20) | YES | MUL | NULL | |

| kind | varchar(255) | NO | | NULL | |

| uuid | varchar(128) | NO | UNI | NULL | |

| description | varchar(1024) | YES | | NULL | |

| state | varchar(128) | NO | MUL | NULL | |

| created | datetime | YES | | NULL | |

| removed | datetime | YES | MUL | NULL | |

| remove_time | datetime | YES | MUL | NULL | |

| data | mediumtext | YES | | NULL | |

| environment_id | bigint(20) | YES | MUL | NULL | |

| vip | varchar(255) | YES | | NULL | |

| create_index | bigint(20) | YES | | NULL | |

| selector_link | varchar(4096) | YES | | NULL | |

| selector_container | varchar(4096) | YES | | NULL | |

| external_id | varchar(255) | YES | MUL | NULL | |

| health_state | varchar(128) | YES | | NULL | |

| system | bit(1) | NO | | b'0' | |

| skip | bit(1) | NO | | b'0' | |

+--------------------+---------------+------+-----+---------+----------------+

20 rows in set (0.03 sec)

Each service has a unique id, so let's check if we can find a service with id 534 as seen before:

mysql> select id,name,account_id,kind,uuid,description,state,created,removed,remove_time,environment_id,external_id,health_state from service where id = 534;

+-----+----------------------+------------+---------+--------------------------------------+-------------+----------+---------------------+---------+-------------+----------------+-------------+--------------+

| id | name | account_id | kind | uuid | description | state | created | removed | remove_time | environment_id | external_id | health_state |

+-----+----------------------+------------+---------+--------------------------------------+-------------+----------+---------------------+---------+-------------+----------------+-------------+--------------+

| 534 | Q-Election-Executive | 3480 | service | 710e9254-d03d-4373-b37b-4f7ef854e2d4 | NULL | removing | 2018-02-09 13:13:21 | NULL | NULL | 74 | NULL | unhealthy |

+-----+----------------------+------------+---------+--------------------------------------+-------------+----------+---------------------+---------+-------------+----------------+-------------+--------------+

1 row in set (0.03 sec)

Note: Basically I just removed the "data" column from the SELECT statement as it contained some sensitive data.

So far so good, this is definitely our service stuck in removing!

What about services which were successfully removed so far? How do they look?

mysql> select id,name,account_id,kind,state,removed,remove_time,health_state from service where state = 'removed';

+------+-----------------------------------+------------+---------------------+---------+---------------------+---------------------+--------------+

| id | name | account_id | kind | state | removed | remove_time | health_state |

+------+-----------------------------------+------------+---------------------+---------+---------------------+---------------------+--------------+

| 49 | Loadbalancer-Spellchecker-Staging | 79 | loadBalancerService | removed | 2017-06-27 14:18:15 | 2017-06-27 14:26:27 | unhealthy |

| 57 | rancher-compose-executor | 3078 | service | removed | 2016-12-15 14:00:44 | 2016-12-15 14:09:54 | unhealthy |

| 61 | load-balancer-swarm | 3078 | loadBalancerService | removed | 2016-12-15 14:00:45 | 2016-12-15 14:02:38 | unhealthy |

| 62 | load-balancer | 3078 | loadBalancerService | removed | 2016-12-15 14:00:45 | 2016-12-15 14:08:16 | unhealthy |

| 146 | Loadbalancer-Intern | 79 | loadBalancerService | removed | 2017-02-01 12:03:34 | 2017-02-01 12:10:31 | unhealthy |

| 231 | Departments-Tool | 5160 | service | removed | 2019-02-12 11:11:40 | 2019-02-12 11:16:10 | unhealthy |

| 403 | Departments-Tool-Regio | 5160 | service | removed | 2019-02-12 11:11:37 | 2019-02-12 11:13:35 | unhealthy |

| 771 | Flugplan | 5160 | service | removed | 2018-11-27 06:48:53 | 2018-11-27 06:49:11 | unhealthy |

| 891 | st-1830-server | 59885 | service | removed | 2019-01-14 16:20:16 | 2019-01-14 16:30:05 | unhealthy |

| 917 | st-1830-server | 3480 | service | removed | 2018-12-10 16:41:23 | 2018-12-10 16:41:25 | healthy |

| 933 | Kenny-Varnish | 12 | service | removed | 2018-12-14 13:03:16 | 2018-12-14 13:04:49 | unhealthy |

| 941 | Kenny-Varnish | 79 | service | removed | 2018-12-14 13:03:35 | 2018-12-14 13:06:28 | unhealthy |

| 944 | Kenny-Varnish | 80 | service | removed | 2018-12-14 13:03:44 | 2018-12-14 13:05:57 | unhealthy |

| 1036 | KennyApp | 8735 | service | removed | 2018-11-30 15:14:13 | 2018-11-30 15:14:45 | healthy |

| 1043 | KennyApp | 60825 | service | removed | 2019-02-13 11:33:31 | 2019-02-13 11:42:02 | healthy |

| 1094 | Thunder-Judi | 5161 | service | removed | 2019-02-12 11:03:29 | 2019-02-12 11:09:40 | unhealthy |

| 1099 | claudiotest | 59885 | service | removed | 2019-02-13 13:20:59 | 2019-02-13 13:28:41 | healthy |

+------+-----------------------------------+------------+---------------------+---------+---------------------+---------------------+--------------+

17 rows in set (0.03 sec)

Obviously the state is set to "removed" (not removing) and the column removed and remove_time contain a date. The health_state doesn't seem to matter here.

Let's try and manually set our confused service to state "removed", including 'removed' and 'remove_time' timestamps:

mysql> UPDATE service SET state = 'removed', removed = '2019-02-13 12:50:00', remove_time = '2019-02-13 12:48:00' WHERE id = 534;

Query OK, 1 row affected (0.06 sec)

Rows matched: 1 Changed: 1 Warnings: 0

Let's take a look at our service again:

mysql> select id,name,account_id,kind,uuid,description,state,created,removed,remove_time,environment_id,external_id,health_state from service where id = 534;

+-----+----------------------+------------+---------+--------------------------------------+-------------+---------+---------------------+---------------------+---------------------+----------------+-------------+--------------+

| id | name | account_id | kind | uuid | description | state | created | removed | remove_time | environment_id | external_id | health_state |

+-----+----------------------+------------+---------+--------------------------------------+-------------+---------+---------------------+---------------------+---------------------+----------------+-------------+--------------+

| 534 | Q-Election-Executive | 3480 | service | 710e9254-d03d-4373-b37b-4f7ef854e2d4 | NULL | removed | 2018-02-09 13:13:21 | 2019-02-13 12:50:00 | 2019-02-13 12:48:00 | 74 | NULL | unhealthy |

+-----+----------------------+------------+---------+--------------------------------------+-------------+---------+---------------------+---------------------+---------------------+----------------+-------------+--------------+

1 row in set (0.03 sec)

According to the Rancher database, this service is now properly removed. What about the Rancher UI?

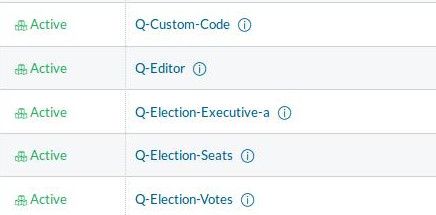

The service Q-Election-Executive, which was stuck in "Removing" has disappeared from the list:

Note Q-Election-Executive-a was a clone of the original service as a workaround. But as the original service was still in removing status, the clone could not be renamed.

And also the service.remove processes have disappeared from the "Running" tab:

Now the cloned service could be renamed to run under the original service name again. Yes!

Leons Petrazickis from Toronto, ON wrote on Apr 12th, 2019:

Thanks! This also works to fix haproxy load balancers that are stuck in Updating-Active. Instead of setting state to removed, set it to active:

update service set state = 'active' where id = ...;

AWS Android Ansible Apache Apple Atlassian BSD Backup Bash Bluecoat CMS Chef Cloud Coding Consul Containers CouchDB DB DNS Databases Docker ELK Elasticsearch Filebeat FreeBSD Galera Git GlusterFS Grafana Graphics HAProxy HTML Hacks Hardware Icinga Influx Internet Java KVM Kibana Kodi Kubernetes LVM LXC Linux Logstash Mac Macintosh Mail MariaDB Minio MongoDB Monitoring Multimedia MySQL NFS Nagios Network Nginx OSSEC OTRS Observability Office OpenSearch PHP Perl Personal PostgreSQL PowerDNS Proxmox Proxy Python Rancher Rant Redis Roundcube SSL Samba Seafile Security Shell SmartOS Solaris Surveillance Systemd TLS Tomcat Ubuntu Unix VMware Varnish Virtualization Windows Wireless Wordpress Wyse ZFS Zoneminder